How do the different automatic transcription services compare in accuracy? And are any of them good enough for your audio?

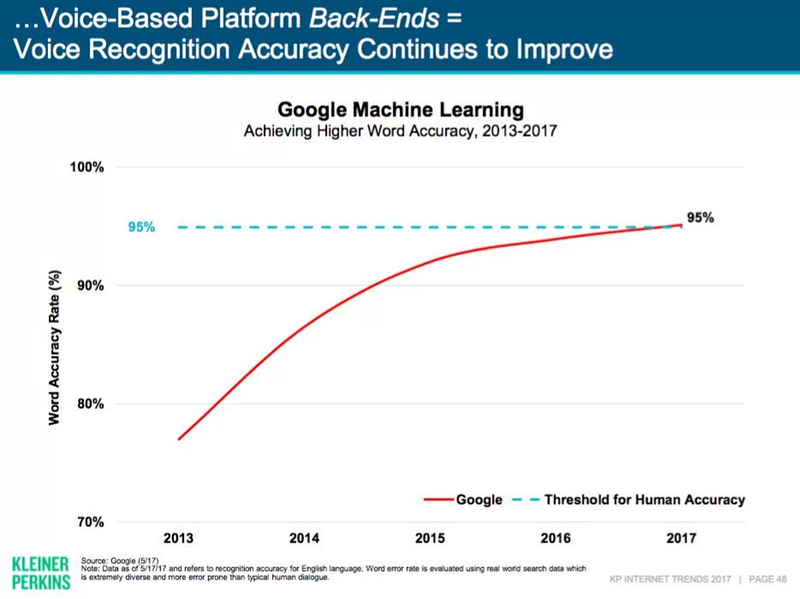

In the last few years, automatic speech recognition has gotten really good. Computer models now understand speech as well as humans (with the right type of audio).

It costs $1 or more per minute to get audio hand-transcribed, so automation makes transcription affordable for all kinds of applications that couldn’t otherwise justify the cost. Plus, it’s way faster: the median time it takes Descript to transcribe a 60-minute file is three minutes.

A slew of companies now offer automatic transcription services, and this article at Poynter by Ren LaForme (published before Descript launched) is a good overview of the field.

But there’s still a lot of mystery around transcription accuracy, so we thought we’d share some of what we learned and offer a deeper comparison.

How we tested

First, we picked eleven audio files, each about ten minutes long, that are representative of the types of things we’ve been seeing transcribed during our beta.

Then we ran them through several transcription services: Descript, Happyscribe, Temi, and Trint. We also did a hand-transcription as a reference.

Finally, we compared the hand transcription to the automatic transcription using Copyscape, the same service used in the Poynter article.

The results

Descript was the most accurate transcription service for eight of the eleven test files, tied on two, and lost (by 1%) on one. Descript’s average accuracy was 93.3%. Temi came in second at 88.3%, Trint third at 87.4%, and Happyscribe fourth at 86.6%. So for these files, Descript’s error rate (6.7%) was a little more than half that of the second-best option (11.7% for Temi).
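To make the "fewer errors" comparison concrete, here is a small sketch that turns the average accuracies above into error rates. It assumes the error rate is simply 100% minus the reported accuracy; the dictionary and the `error_rate` helper are illustrative names, not part of any tool we used.

```python
# Average accuracies reported above (percent of words correct).
accuracy = {
    "Descript": 93.3,
    "Temi": 88.3,
    "Trint": 87.4,
    "Happyscribe": 86.6,
}


def error_rate(acc_percent: float) -> float:
    """Assumed conversion: error percentage = 100 - accuracy percentage.
    Rounded to one decimal to hide floating-point noise."""
    return round(100.0 - acc_percent, 1)


errors = {name: error_rate(acc) for name, acc in accuracy.items()}

# Ratio of Descript's error rate to the runner-up's (Temi) error rate.
ratio = errors["Descript"] / errors["Temi"]

for name, err in errors.items():
    print(f"{name}: {err}% of words wrong")
print(f"Descript / Temi error ratio: {ratio:.2f}")
```

The ratio comes out to about 0.57, which is where "a little more than half as many errors" comes from.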

Why is Descript so good?

Here’s the catch: Almost all of the automatic transcription services out there are powered by an API provided by a big tech company. Descript uses Google. The other popular ones are IBM Watson, Speechmatics, Nuance, and Microsoft. Amazon just launched one, too.

I’m not sure what the other services in this post are using, but if they aren’t using one of the big APIs, I’d recommend they consider it. The big companies have a major advantage: access to the huge amount of training audio needed to build a great speech model. Google has massive audio repositories like YouTube, which is likely a big part of why we found its models more accurate than the others.

With so many smart companies focused on automatic transcription, it’ll just keep getting better and cheaper. As for Descript, our strategy is to be API agnostic — and focus on building the instrumentation to evaluate and choose the best technology available for your audio, including, when it’s called for, human-powered transcription (more on that below).

Accurate transcription is crucial, but it’s just the starting point for Descript — our real technological superpower is what you can do with Descript after you have a transcript: edit audio with the ease of using a word processor.

Caveats

- Internally, we typically use a metric called Word Error Rate (WER) for measuring accuracy. We used Copyscape for this test because it’s consistently within 1% of WER, and it’s a free service anyone can use.

- WER (and Copyscape) are nice because they’re quantifiable, but they’re imperfect metrics. Not all word errors are equal; some affect comprehension more than others. And is comprehension the goal, or is it complete verbatim accuracy, including every stutter and false start?

- The 11 test files were chosen for variety, representation of the type of audio our customers transcribe, and public availability. They’re the first files we ran for this post, and the results are representative of the tests we ran to evaluate the different transcription APIs. That said, this is a very small test set, and we encourage you to run similar tests on your own audio.

- These speech models change regularly. I ran these tests in early December 2017, and I’d expect the results to differ within a few months.
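If you want to run a similar test on your own audio, WER is easy to compute yourself. It is the number of word substitutions, deletions, and insertions needed to turn the automatic transcript into the reference transcript, divided by the number of words in the reference. Below is a minimal sketch using a word-level edit distance. It is not the internal tool we use at Descript, and the text normalization (lowercasing, dropping punctuation) is an assumption; real evaluations often normalize differently, which can shift the numbers.

```python
import re


def normalize(text: str) -> list[str]:
    """Assumed normalization: lowercase, drop punctuation, split into words."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed as a word-level Levenshtein distance."""
    ref = normalize(reference)
    hyp = normalize(hypothesis)
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the reference words processed so far
    # and the first j hypothesis words (one row of the DP table).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (or exact match)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


# Example: two substituted words out of nine reference words.
reference = "The quick brown fox jumps over the lazy dog."
hypothesis = "the quick brown fox jumped over a lazy dog"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")
```

Accuracy is often reported as 1 minus WER. Note that WER can exceed 100% when a transcript contains many inserted words, so that "accuracy" can go negative on very bad audio.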

Is automatic transcription good enough for *you*?

The answer is generally yes, but it depends on a few things.

First of all, how are you using the transcript? Descript keeps your transcript synced to the audio, so if you’re using it as a reference while listening, automatic transcription works well: even if there are errors, they matter less because you can always listen to what was actually said.

If the purpose is to print and read a transcript, then it depends on the quality of your audio. Listen to some of the samples posted above to get a sense of how different types of audio perform. In general, broadcast-quality recordings come out ~95% accurate, but an iPhone on the table in a cavernous conference room with five people talking could be 70% or less.

Here are some things we’re doing at Descript to make it easy to decide if automatic transcription works for you.

- Every account includes 30 minutes of free transcription so you can give it a try. And you can earn another 100 minutes for every new user you refer.

- If automatic transcription doesn’t cut it, you can upgrade to White Glove transcription, where humans will transcribe your audio in 24 hours or less for $1/minute. And we deduct the cost of your automatic transcription, so there’s no risk in trying an automatic transcript first.